One of the animating goals of Earth Genome is to make direct interventions with environmental data in the world. Journalists provide a good gut check on this work. They care not for the statistics of machine learning test runs. They will not publish data that is only mostly correct. They need facts on the ground that are verifiable.

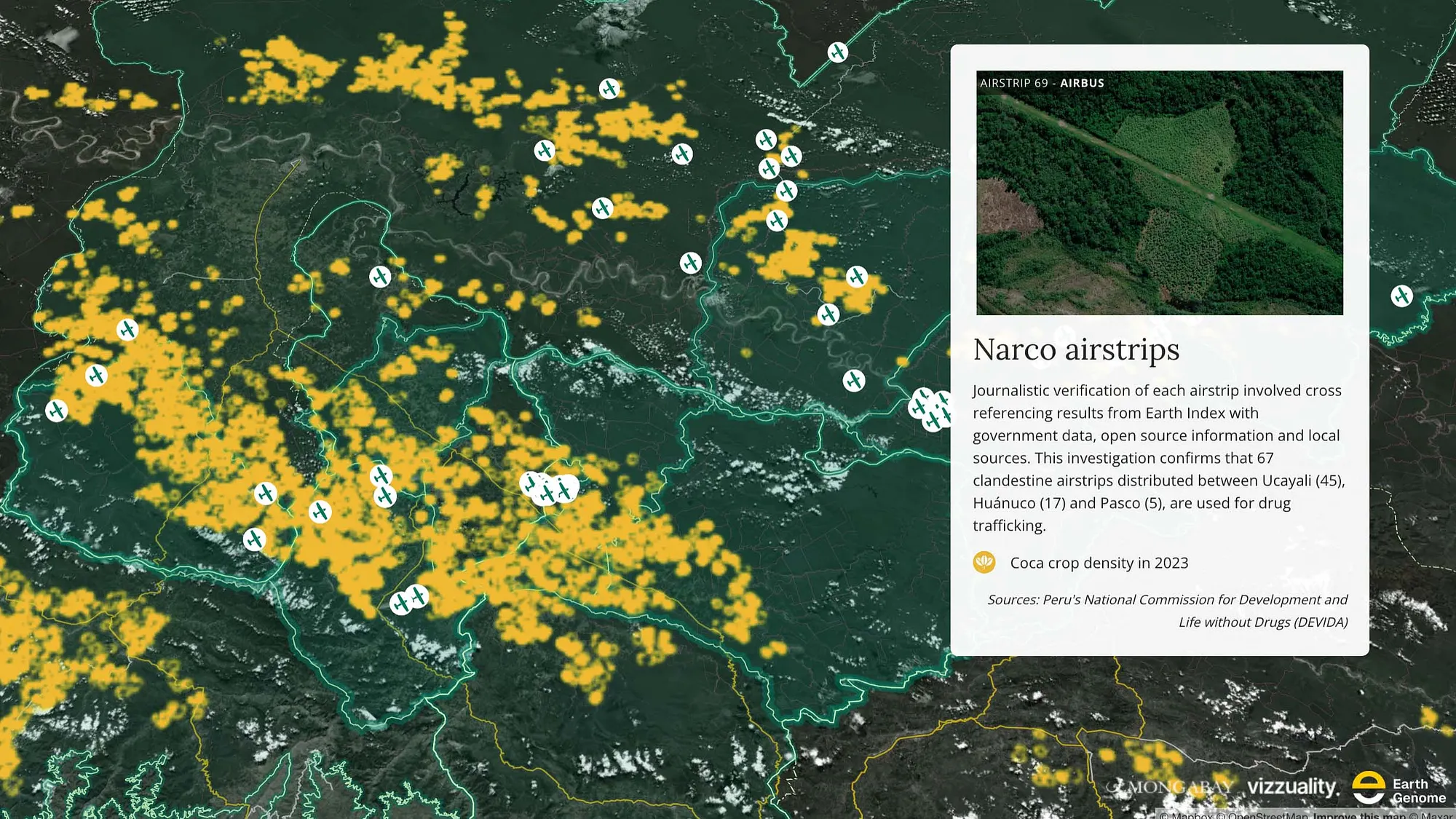

Last month Mongabay published a series of reports on the impacts of cocaine trafficking at its source in Peru: *Narco airstrips beset Indigenous communities in Peruvian Amazon*.

They documented rainforest lost to coca cultivation, the detritus of drug labs, airstrips being readied for shipments of cocaine hydrochloride or coca paste, and in nearby communities, accounts of changing culture, fear, and violence. Beauty pageants and drug overdoses. Recruitment to the cartels. Indigenous guards patrolling to repel the invaders. The deaths of 15 Indigenous leaders since the advent of covid.

The Kakataibo leader Mariano Isacama, who accompanied Mongabay on their field research, received anonymous threats after publicly condemning the traffickers. Before the stories went to press, a search party of Kakataibo guards found his body; he had been killed.


Mongabay editors reached out to us with the idea for the investigation. If we could find the airfields cut out of the rainforest for shipping drugs, they would know where cartels are most active. Finding them is hard. The airfields are rough-hewn from the forest: dirt tracks five meters wide and several hundred meters long. After a few shipments, the forest might be allowed to regrow until the track is needed again. The rainforest is vast. At the same time, large parts of the forest have been cleared for crop fields or ranches, whose long, straight dirt roads can masquerade as airfields. Given the size of the survey area, the job is akin to finding a handful of toothpicks on an overgrown football field.

In the end, we found 67 unauthorized airfields across the Ucayali, Huánuco and Pasco regions that Mongabay confirmed are in use in the illicit drug trade.

We applied Earth Index, our AI tool for parsing satellite imagery, to lead the search. Given a few examples of the beginnings or ends of airfields (the sudden endpoints of the tracks are their most distinctive feature), the tool retrieves from its vector catalog other lines of dirt among the trees and fields that could plausibly be runways for drug shipments.
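The post doesn't describe Earth Index's internals, so what follows is only a minimal sketch of the general idea, embedding-based similarity search, under stated assumptions: each image tile has already been converted to a fixed-length embedding vector, the catalog is a plain dict keyed by hypothetical tile IDs, and candidates are ranked by cosine similarity to the average of the seed examples. A real vector catalog covering a survey area this large would use an approximate nearest-neighbor index rather than this brute-force loop.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mean_vector(vectors: list[list[float]]) -> list[float]:
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def retrieve(catalog: dict[str, list[float]], seeds: list[str], k: int = 5) -> list[tuple[str, float]]:
    """Return the k tiles most similar to the mean of the seed tiles.

    Brute-force scan over every tile; the seeds themselves are excluded.
    """
    query = mean_vector([catalog[s] for s in seeds])
    scored = [(tile, cosine(vec, query)) for tile, vec in catalog.items() if tile not in seeds]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 3-dimensional embeddings; real ones would have hundreds of dimensions.
catalog = {
    "seed_a": [0.9, 0.1, 0.0],   # analyst-picked airstrip endpoint (hypothetical)
    "seed_b": [0.8, 0.2, 0.1],   # analyst-picked airstrip endpoint (hypothetical)
    "tile_1": [0.85, 0.15, 0.05],
    "tile_2": [0.1, 0.9, 0.2],
    "tile_3": [0.7, 0.3, 0.0],
}
print(retrieve(catalog, ["seed_a", "seed_b"], k=2))
```

Here `tile_1` and `tile_3`, whose embeddings point in roughly the same direction as the seeds, come back ahead of `tile_2`.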

We check the detections. At this stage, with only a few seed examples, the results also include roads and other scars in the forest. We adjust the labeling: we mark a few counterexamples, things for the AI to avoid, and search again. Each time a different set of candidates comes back, and sometimes, on the banks of a river or tucked deep in the forest, we spy the telltale signs of an airfield.
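One simple way to fold counterexamples into a similarity search, assumed here for illustration rather than taken from Earth Index, is to score each unlabeled tile by its mean similarity to the positive examples minus its mean similarity to the negatives. The embeddings and tile names (`strip_end`, `road`, `cand_a`, `cand_b`) are hypothetical, and `neg_weight` is an illustrative knob for how hard to push away from the counterexamples.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rescore(catalog: dict[str, list[float]], positives: list[str], negatives: list[str],
            neg_weight: float = 1.0, k: int = 5) -> list[tuple[str, float]]:
    """Rank unlabeled tiles by mean similarity to positives minus
    neg_weight times mean similarity to negatives (a hypothetical scoring rule)."""
    labeled = set(positives) | set(negatives)
    results = []
    for tile, vec in catalog.items():
        if tile in labeled:
            continue  # never hand back tiles the analyst has already labeled
        pos = sum(cosine(vec, catalog[p]) for p in positives) / len(positives)
        neg = sum(cosine(vec, catalog[n]) for n in negatives) / len(negatives) if negatives else 0.0
        results.append((tile, pos - neg_weight * neg))
    results.sort(key=lambda pair: pair[1], reverse=True)
    return results[:k]


# Hypothetical embeddings: one airstrip endpoint, one straight ranch road, two candidates.
catalog = {
    "strip_end": [1.0, 0.0, 0.0],
    "road": [0.6, 0.8, 0.0],
    "cand_a": [0.8, 0.0, 0.6],
    "cand_b": [0.8, 0.5, 0.0],
}
print(rescore(catalog, ["strip_end"], [], k=2))        # positives only
print(rescore(catalog, ["strip_end"], ["road"], k=2))  # with a counterexample
```

With only the positive seed, the road-like `cand_b` outranks `cand_a`; marking `road` as a counterexample flips the order.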

The AI gives an assist, while determinations belong to the human analyst. Sometimes we find airfields ourselves near the places suggested by the AI. On the other hand, we wouldn't even know how to begin to canvass such a big area without the AI to focus our attention and tell us where to look.

By considering the historical development of a candidate airstrip in time-lapse imagery, its length and straightness, the proximity of roads, villages, and cultivated fields, data on the intensity of coca production, and distinctive features like tree topping along the line of flight approach, we can assure ourselves of an airfield ID with near certainty, and with enough confidence to share the finding with the journalists.
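Two of the criteria above, length and straightness, are easy to quantify once a candidate track has been traced as a polyline. A minimal sketch, assuming vertices in a projected coordinate system measured in meters (such as UTM). The thresholds in the hypothetical `looks_like_strip` helper are illustrative placeholders chosen to echo the "several hundred meters" figure above, not thresholds from the actual workflow; in practice numbers like these would only feed the analyst's judgment alongside the time-lapse, proximity, and tree-topping evidence.

```python
import math

# (x, y) in meters; assumes the track was digitized in a projected CRS such as UTM.
Point = tuple[float, float]


def path_length(points: list[Point]) -> float:
    """Total length of the polyline, summing each segment."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def straightness(points: list[Point]) -> float:
    """Endpoint-to-endpoint distance divided by path length; 1.0 is perfectly straight."""
    total = path_length(points)
    return math.dist(points[0], points[-1]) / total if total else 0.0


def looks_like_strip(points: list[Point], min_length_m: float = 300.0,
                     min_straightness: float = 0.98) -> bool:
    """Hypothetical screen: long enough and straight enough to merit a closer look.

    Default thresholds are illustrative placeholders only.
    """
    return path_length(points) >= min_length_m and straightness(points) >= min_straightness


straight = [(0.0, 0.0), (200.0, 2.0), (400.0, 0.0)]                    # ~400 m, nearly straight
winding = [(0.0, 0.0), (150.0, 100.0), (300.0, 0.0), (450.0, 100.0)]   # long but zigzagging
short = [(0.0, 0.0), (120.0, 0.0)]                                     # straight but too short
print(looks_like_strip(straight))  # True
print(looks_like_strip(winding))   # False
print(looks_like_strip(short))     # False
```

A straight ranch road of the same length would pass this screen too, which is why the contextual checks in the paragraph above still carry the decision.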

And here’s the point. The journalists used these detections to plan their reporting trips, where to go and who to talk to, how to keep themselves safe. They depended on the veracity of our work.

A lot of machine learning for remote sensing can feel like an academic exercise. Train a model up to, say, 70% precision; extrapolate like heck to some new geography; trumpet the scope of your geographic coverage; publish. (If I may add to this straw man I'm building, 70% precision means nearly 1 false detection for every 2 that are correct.)
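The arithmetic behind that aside: precision $p$ is the share of detections that are correct, $p = \text{TP}/(\text{TP}+\text{FP})$, so the number of false detections per correct one is

$$
\frac{\text{FP}}{\text{TP}} = \frac{1-p}{p} = \frac{0.3}{0.7} \approx 0.43,
$$

or roughly 0.86 false detections for every 2 correct ones.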

This is fine when the goal is to push boundaries in algorithm development. It’s not great when someone is trying to work closely with the data outputs.

Gathering labeled data is tedious and time-consuming, even when, as in this case, we can pull some examples from OpenStreetMap or other open datasets. In remote sensing we often have to work with poorly sampled datasets that are small, noisy, or biased. For the same reason, there is a temptation to extrapolate models to new geographies on a hit-and-hope basis. At Earth Genome, we very often check all automated detections before surfacing data, and where that's impractical, we try to run sampling studies of the outputs to estimate real-world precision. At least future users of the data will then know what they are getting into. (If anyone has better ideas for ex-post-facto validation, please give a shout.)
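For a sampling study of this kind, one standard approach (offered as a sketch, not a description of our exact protocol) is to draw a simple random sample of detections, check each by hand, and report the observed precision with a Wilson score interval, which behaves better than the plain normal approximation at small sample sizes or at precisions near 0 or 1. The function names, the detection IDs, and the 95% default are assumptions for illustration.

```python
import math
import random


def sample_for_review(detections: list[str], n: int, seed: int = 0) -> list[str]:
    """Draw a simple random sample (without replacement) of detections to check by hand."""
    rng = random.Random(seed)  # fixed seed so the review set is reproducible
    return rng.sample(detections, min(n, len(detections)))


def wilson_interval(correct: int, checked: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion; z=1.96 gives ~95% confidence."""
    if checked == 0:
        raise ValueError("need at least one checked detection")
    p = correct / checked
    denom = 1 + z**2 / checked
    center = (p + z**2 / (2 * checked)) / denom
    half = z * math.sqrt(p * (1 - p) / checked + z**2 / (4 * checked**2)) / denom
    return center - half, center + half


detections = [f"det_{i}" for i in range(1000)]  # hypothetical detection IDs
to_check = sample_for_review(detections, n=100)
# ...an analyst reviews each sampled detection; suppose 70 of the 100 hold up...
low, high = wilson_interval(correct=70, checked=len(to_check))
print(f"precision, 95% CI: {low:.2f} to {high:.2f}")
```

Checking 100 randomly drawn detections and finding 70 correct gives an interval of roughly 0.60 to 0.78, a range a future user of the data can plan around.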

Our hope is that this journalism leads to support for Indigenous communities defending their lands and to better enforcement of the law, and that it inspires some forbearance in consumers of cocaine, who will know that the drug, and its halo of violence, comes from a specific place.
